Sunday, 24 April 2011

Remember - Scientists are People too

A few days ago Chris Mooney posted an interesting article, "The Science of Why We Don't Believe Science", which looks at the reasons why many people deny the scientific research on issues in the public eye, such as evolution and global warming. I found both the article and the many sources it quotes of interest.

The article starts with a quotation from Leon Festinger: "A man with a conviction is a hard man to change. Tell him you disagree and he turns away. Show him facts or figures and he questions your sources. Appeal to logic and he fails to see your point." I agree.

As someone who has worked on unconventional blue-sky research I have often come up against this problem, where the "man with a conviction" is a scientist in a specialty whose foundation assumptions your research is questioning. The usual defence used to fend off alien ideas is to say "Exceptional claims require exceptional proof", in other words: I won't waste time considering your logic until you have enough data to prove it. If you later come back with more information the cycle is repeated, with a request for more data.

To be realistic, most "novel" ideas are actually old ideas which have been dismissed for good scientific reasons, and the expert just doesn't want to be bothered with, for example, yet another proposal for a perpetual motion machine.

The real problem is that genuinely new ideas have great difficulty getting through, as converting the initial "low-level" idea into a fully scientific case, with supporting evidence, takes time and money. The expert scientist with a conviction may well end up stifling genuine new ideas in the process of rejecting the "time-wasters".

I will return to this issue in more detail later.

Monday, 18 April 2011

I discover Babel's Dawn

As I am sure you will have realised, I am a newcomer to blogging as a tool for communicating scientific ideas, having abandoned my research in disgust over 20 years ago for the reasons I am currently writing up in The History of CODIL. Not only am I now writing my own blog, but more importantly I am beginning to explore the many established blogs to see how things have progressed during the intervening decades.

One thing I have been trying to explore is the possible relationship between my old research and the study of the evolution of intelligence. One area I never properly explored when I was developing CODIL was the link with natural language, perhaps because there was no linguistics department at the university where I was working, so conversation in the common room never wandered in that direction.

Yesterday I discovered a post, Did language originate in Africa, on the blog Panda's Thumb and decided to follow the story up. The original paper, Phonemic Diversity Supports a Serial Founder Effect Model of Language Expansion from Africa, had just been published in Science behind a subscription wall. However, I found a very useful review of the original paper, Last Common Language was in Africa, on the blog Babel's Dawn.

The idea of the “Last Common Language” got me thinking, and I found many of the other posts on the blog extremely stimulating. Over the next few days I will be revisiting this site to see where it leads. However the immediate impact was to make me think it might be useful to describe CODIL in terms of a Common Communication Language:-

CODIL can be considered as a common application independent language which provides two way communication between the human user and a novel type of information processing machine. To ensure good communication there must be “mutual understanding” and this means that the information processing machine must be able to explain what it is doing in the same language as the human uses to instruct the machine.

The interesting question that this raises is how far human intelligence resides in an innate processing mechanism, in the communication language, or in the information transferred using the communication language. I am sure I will be returning to this issue later.

Afterthought: A different view of the paper is given in Phonemic diversity decays "out of Africa"? This discusses the statistical foundations of the paper, and there can be little doubt that there are problems related to the quality of the source data and the way it has been manipulated. The difficulty is knowing what you can do with inherently messy data, which can never give 100% confidence in the result of statistical calculations. As a scientist with no experience of this field, but some with statistics, I see the paper as a useful model to base discussions around, and perhaps as a means of stimulating the collection of more usable data.


Saturday, 16 April 2011

Friday, 15 April 2011

Thursday, 14 April 2011

Make sure you fill in the right box

Mistakes in filling in forms can be very expensive, and you should always be certain that you are putting the information in the right box. I am reminded of this fact on April 14th, because my daughter Belinda died on her birthday. I won't go into details here, except to say that she might still be alive today if a policeman had not made a clerical error. He put the right information in the right box on the WRONG form, which he then gave to someone who was known to be terrified of the police (for good reason, because of earlier mistakes) and who was also known to be a suicide risk.

10 Important Differences between Brains and Computers

In March 2007 Chris Chatham posted 10 Important Differences Between Brains and Computers on the Developing Intelligence Blog. Over three years later, while exploring Science Blogs for the first time, I discovered the post and wrote a detailed response, without realising that the blog was then inactive and no-one would see what I had said. I have decided to reproduce the relevant part of my comments here.

OK, some good points, and I will comment on each in turn, but first the most important difference.

The essence of a stored program computer is that in addition to the “processor” and “memory” you need a program that needs to be created – and I would suggest that the intelligence of the creator (i.e. the god-like programmer or team of programmers) always greatly exceeds the effective intelligence of the resulting programs. If you consider an evolutionary approach devoid of “Intelligent Design” the stored program model must be considered totally inappropriate before you consider any other factors.

The problem is that after the war computers took off at an enormous pace – with potential manufacturers falling over one another to try and capture the “clever programmable calculator market”. No one – but no one - had time to stop and do any blue sky research to see if there were other ways of organising electronics to build a human-friendly information processor. After 20-30 years of this mad rush to make money and build careers everyone knew that:

(1) You had to be very clever to program a computer

(2) There was a vast establishment of people whose careers and/or income depended on stored program computers and

(3) Computers were so wonderful the underlying theory MUST BE RIGHT.

People started to look at the Human-Computer Interface – but this was not fundamental research – it was technology to find better ways of hiding the incomprehensible “black box” in the heart of a stored program computer.

Over 40 years ago, as a “naive novice” in the computer industry, I was looking at a major commercial system (say 250,000 customers, 5,000 product lines, 25,000 transactions a day, and a dynamically changing market). Not knowing any better, I decided that the communication chain involving programmers and systems analysts was a liability, and that one could provide a system, friendly to the sales staff, which could change dynamically with changing requirements. Of course my boss threw the whole idea into the waste paper basket and I decided to change jobs. I ended up in the Future Large Computer marketing research department of a small but imaginative computer company. I quickly realised (1) that there were many other tasks with similar requirements and (2), from talking to hardware designers, that it was easy to redesign the central processor if you had a good reason to do so. I concluded it was possible to build a “white box” information processor which could be used by normal humans to help them work on dynamic open-ended tasks. When I mentioned this to my boss, a “TOP SECRET” label was stuck on the idea, patents were taken out, and I was put in charge of a team which, two years later, showed that the basic idea was sound.

So why haven't you heard of it? Well, at this point the company was taken over and, as my idea was incompatible with the “stored program computer” approach, I was declared redundant. I found what turned out to be a most unfriendly hole in which to try to continue the research, but after 20 years, a family suicide and a bullying head of department I gave up “fighting the computer establishment” from sheer exhaustion. Selling the idea became harder and harder as even school children were being brainwashed into believing that computers are the ultimate technology and that you need to be clever to program them. The idea that it might be possible to build a simple human-friendly processor was deemed ridiculous, as demonstrated by the millions of people whose careers depended on the fact that stored program computers worked.

So what I will do is to answer your questions in terms of the kind of processor I was researching.

Difference # 1: Brains are analogue; computers are digital

My initial proposals, and all subsequent experiments, involved sets defined by text strings, but in theory all the processor needed was a mechanism to say whether two set elements were (at least approximately) identical. It was not concerned with how these sets were represented. In principle the system I proposed would be quite happy with the idea of a “cat” being represented as the written word “cat”, the sound of someone saying “cat”, the sound of purring, visual images of a cat or parts of a cat, the feel of stroking cat fur, etc., or any combination of these. What is important in understanding the processes involved is not the medium in which the information is stored but the ability to make a comparison in that medium.
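To make the representation-independence point concrete, here is a minimal Python sketch. It assumes nothing about how CODIL was actually implemented: a toy matcher never inspects how elements are represented and relies only on a supplied comparison function. The names `find_matches`, `same_text` and `same_signal`, and the 0.1 tolerance, are hypothetical choices for illustration.

```python
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")

def find_matches(cue: T, store: Iterable[T], same: Callable[[T, T], bool]) -> List[T]:
    """Return the stored elements judged 'the same' as the cue.
    The matcher never looks inside an element; it only calls `same`."""
    return [element for element in store if same(cue, element)]

def same_text(a: str, b: str) -> bool:
    # Text representation: identical apart from letter case (an illustrative rule).
    return a.lower() == b.lower()

def same_signal(a: float, b: float, tolerance: float = 0.1) -> bool:
    # Numeric "signal" representation: approximately equal within an arbitrary
    # tolerance, standing in for comparing sounds or images.
    return abs(a - b) <= tolerance

print(find_matches("cat", ["Cat", "dog", "CAT"], same_text))      # ['Cat', 'CAT']
print(find_matches(440.0, [439.95, 523.3, 440.08], same_signal))  # [439.95, 440.08]
```

Swapping the comparison function is all it takes to move from text to numbers; the matching loop itself does not change.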

Difference # 2: The brain uses content-addressable memory

My proposals were based entirely on content addressable memory, because that is how people think and what they can understand. In fact one of the difficulties of my approach was that if you had a task which was best analysed mathematically in terms of a regular grid, such as a chess board, it was at a disadvantage compared with a stored program computer, which after all was designed to handle mathematically regular problems. [Comment: how relevant are the precisely predefined and unchanging rules of chess, and the fixed and unchanging dimensions of a chess board, to the kinds of mental activities needed to be a hunter-gatherer?]
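As a rough illustration of content-addressed retrieval, here is a Python sketch built on my own assumptions: the (category, value) pair format and the `recall` function are hypothetical, not taken from CODIL. Items are found by what they contain, never by where they are stored.

```python
from typing import FrozenSet, List, Set, Tuple

Pair = Tuple[str, str]       # (category, value), e.g. ("Colour", "Black")
Item = FrozenSet[Pair]

def recall(memory: List[Item], cue: Set[Pair]) -> List[Item]:
    """Content-addressed retrieval: return every stored item that contains
    all the pairs in the cue. No item is fetched by its position in memory."""
    return [item for item in memory if cue <= item]

memory = [
    frozenset({("Animal", "Cat"), ("Colour", "Black")}),
    frozenset({("Animal", "Dog"), ("Colour", "Black")}),
    frozenset({("Animal", "Cat"), ("Colour", "Ginger")}),
]
black_things = recall(memory, {("Colour", "Black")})
print(len(black_things))  # 2
```

An address-based machine would typically reach the ginger cat as `memory[2]`, a reference that only means something to a program that already knows what is stored at that position.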

Difference # 3: The brain is a massively parallel machine; computers are modular and serial

My proposals are based on the idea of having one comparatively simple processor which operates recursively, i.e. it is continually re-using itself. This is an economical way of building the processor if you are working in electronics (or at least the electronics of the 1970s, which was when the first commercial hardware might have been built if it had got that far). Another way of looking at recursion is that you have one processor which passes subsidiary tasks on to itself. If you had millions of identical processors it could just as easily work by passing subsidiary tasks on to other identical processors to work in parallel. While I never looked seriously at the problem of parallel working, my approach should be equally valid with either serial or parallel processing.
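The recursion-versus-parallelism point can be sketched in Python. This is an assumed toy, not the CODIL processor: a "task" is either a number or a nested list of sub-tasks, and the hypothetical function `process` hands each sub-task back to itself. `process_parallel` instead gives the top-level sub-tasks to identical copies of the same function running in a thread pool; both produce the same answer.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Union

# A task is either a plain number (a leaf) or a list of sub-tasks.
Task = Union[int, List["Task"]]

def process(task: Task) -> int:
    """Serial version: the one processor passes each sub-task back to itself."""
    if isinstance(task, list):
        return sum(process(sub_task) for sub_task in task)
    return task

def process_parallel(task: Task, workers: int = 4) -> int:
    """Parallel version: top-level sub-tasks go to identical copies of `process`."""
    if not isinstance(task, list):
        return task
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(process, task))

task = [1, [2, 3], [[4], 5]]
print(process(task), process_parallel(task))  # 15 15
```

The thread pool only demonstrates the dispatch structure; in CPython the global interpreter lock means it will not speed up arithmetic of this kind.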

[Comment – of course neural nets (as developed by the A.I. community) are parallel – but they are not inherently user-friendly – as my approach tried to be.]

Difference # 4: Processing speed is not fixed in the brain; there is no system clock

Of course the stored program computer has an electronic clock to ensure that all components work as fast as possible in synchronisation. However, I feel that this is really saying no more than that a circuit board and a brain use different mechanisms in order to do what they do. In one sense this relates to the difference between serial and parallel processing (#3), in that with serial processing you must have a very robust sequence of operations which can be executed rapidly. With parallel processing you can have many processes going on simultaneously, and it doesn't matter if they are not perfectly synchronised as long as there is some mechanism to bring the combined results together.

Difference # 5: Short-term memory is not like RAM

An important feature of my system was a “working area” which I called “The Facts” and which I considered to be equivalent to human short term memory. Apart from some practical implementation features, the difference between the Facts and any other information in the knowledge base was that the Facts were the active focus of attention. The Facts were, of course, context addressed.

However, there was a very interesting feature of the Facts. One of the first observations when I tried out a variety of applications was that the number of active items in the Facts at any one time was very small, often around 6 or 7 [Miller's Magic Number 7?], and I don't think I ever found an application that genuinely needed as many as a dozen. (In electronics terms there was no reason why I shouldn't have had a system that could work with a thousand or more such items.) The reason the number was small was that, in order to make the approach human-friendly, I needed to find a way that would not overwhelm the human with too many active facts. It seemed important that, to be understandable, the number of Facts items should match the number of items in human short term memory if the human was doing the same task in their head. In other words, the processing architecture I was proposing could work just as easily with very much more complex problems in terms of the number of Facts being simultaneously considered, but humans would have difficulty following what was going on because of the limited capacity of human short term memory.

(It should be noted that if the same tasks were implemented using conventional programming techniques they would need a very much larger number of named variables. This is due to the difference between the linear addressing of the stored program computer and an associative system. In an associative system the same things are given the same name wherever they occur, so that the processor can see they are the same. In a conventional programming language the addressing needs to distinguish between related entities by giving each occurrence a different name, because they are held at different addresses.)

Difference # 6: No hardware/software distinction can be made with respect to the brain or mind

You mis-identify the problem. On one hand you have a cell with information stored within. On the other hand you have a processor with two different kinds of information – program and data – stored in the memory. The real difference is that the brain does not distinguish between program and data while the stored program computer does.

My system does not distinguish between program and data; it simply stores information which can be used in subtly different ways. What I called the “Decision Making Unit” simply compared items from the knowledge base (i.e. long term memory) with the currently activated Facts (the short term memory), and as a result the Facts might be changed or become part of the knowledge base. Apart from (1) adding new information to the Facts from the “outside world” and (2) triggering an action in the “outside world” when some combination of Facts called for it, that was all the processor could do.
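A minimal Python sketch of a match-and-update cycle of the kind described above may help. It is a toy under stated assumptions, not the real Decision Making Unit: the Facts are a dictionary mapping categories to values, a knowledge base item "applies" if it shares at least one category with the Facts and contradicts none of them, and `compatible`, `decide` and `memorise` are hypothetical names. Note that the knowledge base entries act as both data and rules; there is no separate task-specific program.

```python
from typing import Dict, List

Facts = Dict[str, str]  # category -> value, e.g. {"Animal": "Cat"}

def compatible(item: Facts, facts: Facts) -> bool:
    """An item applies if it shares a category with the Facts and contradicts none."""
    shares = any(category in facts for category in item)
    agrees = all(facts.get(category, value) == value for category, value in item.items())
    return shares and agrees

def decide(knowledge_base: List[Facts], facts: Facts) -> Facts:
    """One pass of a toy decision unit: each applicable item from long-term
    memory adds its other pairs to the (short-term) Facts."""
    updated = dict(facts)
    for item in knowledge_base:
        if compatible(item, updated):
            updated.update(item)
    return updated

def memorise(knowledge_base: List[Facts], facts: Facts) -> List[Facts]:
    """Let the current Facts become part of the knowledge base."""
    return knowledge_base + [dict(facts)]

knowledge_base = [
    {"Animal": "Cat", "Sound": "Purr"},
    {"Animal": "Dog", "Sound": "Bark"},
    {"Sound": "Purr", "Mood": "Content"},
]
facts = decide(knowledge_base, {"Animal": "Cat"})
print(facts)  # {'Animal': 'Cat', 'Sound': 'Purr', 'Mood': 'Content'}
```

The dog item is skipped because it contradicts the Fact that the animal is a cat, while the purring item applies only after the first item has added the sound to the Facts.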

This is a critical distinction. The information in a unit of memory in a stored program computer is meaningless except implicitly in that it is defined by a series of complex predefined “program” instructions somewhere else in the computer. In the brain, and in my system, the meaning is embedded in the memory unit and so can be used meaningfully without reference to a separate task specific program.

Difference # 7: Synapses are far more complex than electrical logic gates

We are back to the biological versus electronic hardware situation. The driver mechanism in my system is remarkably simple (in stored program computer terms) and produces very different effects with superficially small changes in context. I suspect that synapses behave differently with minor changes in context, but I would not want to explore the analogy further at this stage.

Difference # 8: Unlike computers, processing and memory are performed by the same components in the brain

I doubt that the brain uses exactly the same proteins and other chemicals in exactly the same way for both processing and memory. If you concede this point, and accept that the brain's cells have different mechanisms operating in the same “box”, the analogy is with a computer on a single chip, which also has everything in the same box.

Difference # 9: The brain is a self-organizing system

At the processor level the stored program computer is not designed to be self-organising – so one would not expect it to be!

My system was designed to model the way a human thought about his problems, and the processing of the Facts was completely automatic, in that the decision making unit organised information in a way which was independent of the task. This is a very important distinction between what I was proposing and a stored program computer. The computer requires a precise pre-definition of the task it has to perform before it can do anything. My approach involves the decision making unit scanning and comparing structured sets in such a way that solutions happen to fall out in the process [would you consider this to be self-organising?]. The human brain similarly can handle information from a vast number of different contexts without having to be pre-programmed for each particular context that might occur.

The final version of my system had several features which could be called directed self-organisation of the knowledge base, allowing information to become more or less easy to find depending on its usage history. Because I was looking at a system to help humans, I felt it was important that triggering such a process should be under human control. I must admit I had never thought of implementing it in a way that reorganised the user's information without the user being in control. If the system worked this way, I suppose you might call that “Free Will”.
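Usage-directed reorganisation under user control might look something like the following Python sketch. The class `KnowledgeBase`, its methods and the most-used-first policy are all assumptions made for illustration, not a description of the original system: searches quietly record which items proved useful, but the order of the store only changes when `reorganise()` is explicitly called.

```python
from collections import Counter
from typing import List, Tuple

# Hypothetical item format: a tuple of (category, value) pairs.
Item = Tuple[Tuple[str, str], ...]

class KnowledgeBase:
    """Toy store: searches record usage; reordering happens only on request."""

    def __init__(self, items: List[Item]) -> None:
        self.items: List[Item] = list(items)
        self.uses: Counter = Counter()

    def search(self, category: str, value: str) -> List[Item]:
        # Scan in the current order and note which items proved useful.
        hits = [item for item in self.items if (category, value) in item]
        self.uses.update(hits)
        return hits

    def reorganise(self) -> None:
        # Called explicitly by the user: most-used items move to the front.
        # list.sort is stable, so items with equal usage keep their relative order.
        self.items.sort(key=lambda item: self.uses[item], reverse=True)

kb = KnowledgeBase([
    (("Animal", "Dog"), ("Sound", "Bark")),
    (("Animal", "Cat"), ("Sound", "Purr")),
])
kb.search("Sound", "Purr")
kb.reorganise()
print(kb.items[0])  # (('Animal', 'Cat'), ('Sound', 'Purr'))
```

Keeping `search` and `reorganise` separate is the design choice that leaves the user, rather than the machine, in charge of when the knowledge base changes shape.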

Difference # 10: Brains have bodies

This relates to the input and output of stimuli with the outside world – and I would not expect there to be close similarities between a biological and electronic system.

Wednesday, 13 April 2011

Where have all the children gone?

Yesterday was a bright sunny day and I looked out of the window to see two boys, about 8 years old, playing football on the play area next to our house. It made me think about a blog I had just read online about "Angry Birds".

You might be wondering what the connection is, so let me explain. When we moved into the house over forty years ago we had a problem. In fine weather there was always a crowd of children playing football, and footballs were regularly coming over the wall into our garden. It got so bad that we had a word with some of the parents and the community policeman, and came to an arrangement that if the same ball came over the wall three times in the same day we would confiscate it, putting it by our front door for collection the following morning.

Perhaps I should have realized what was going to happen when about 30 years ago I had to teach a large university admissions class the elements of using a computer. Over half of them had not even used a typewriter while at the other extreme four had their own personal computers. At the end of the first year results were overall pretty satisfactory - except that the four who came with their own computers all failed. After a couple of years it seemed that we were getting a number of failures because either the student was so addicted to computer games (including writing their own) that they neglected other studies or that they were highly introverted loners with rich parents who had given them a computer to develop a marketable skill. The trouble was that in using the computer they became even more solitary and lacking in social skills. Fortunately the problem seemed to cure itself in later years - but this may have been because children showing severe computer addiction symptoms had problems earlier and so never made university entrance grades.

I had left the university by the time PlayStations came in, and was not deliberately monitoring the play space next door. However, thinking back, it must have been about that time that footballs stopped coming over our wall as a regular event. I don't think we have had one over the wall in the last two or three years, and this is the first time I have seen any children playing a ball game on the play space this year.

So back to the blog, which was entitled "Why Angry Birds is so successful and popular: a cognitive teardown of the user experience." The author, Charles L Mauro, estimates that this popular game, which can be played on mobile phones and other portable devices, is played worldwide for the equivalent of 1.2 billion hours a year.

This makes me wonder what the total figure is for all the computer games, including multi-player online games. While I am sure that other factors are involved, it could well be that the lack of footballs in our garden is due to the advent of the computer game.

See also my review Keyboard Junkies, originally published in the New Scientist.

Boxing with Apathy

I really enjoyed this video, which made me think about the ways information is "boxed" to influence the ways we think.

It ends with a comment about the failure of political voting systems in Canada, a topic very relevant to people in the UK who will soon have to tick a box for the type of election system to be used in future parliamentary elections.


Monday, 11 April 2011

Learning about Research as an undergraduate

On his blog DrugMonkey asked the question "Are University Professors Doing Their Job if Undergrads Do Not Know How Research Works?" Several people posted their experiences about what they learnt about research and the work of academic scientists and I decided to add my bit -

I became an undergraduate in a leading UK Chemistry Department 55 years ago, and what I learnt (and did not learn) about research is perhaps best illustrated by discussing a final year project which led to my going on to do a Ph.D. in this area of Chemistry.

The project was an investigation into a recently published paper on a chemical compound called benzimidazole. The author had carried out a theoretical calculation to predict its chemical properties and had quoted 6 experimental papers which "supported" his findings, while ignoring perhaps 200 papers in the literature which failed to support his calculations. Even worse, four of the quoted papers were not really relevant and another was followed by a correction which had been "overlooked". My project was to investigate the 6th reference.

What I learnt at this stage: OK, the paper was so wrong it could only be explained by fraud, but it soon became clear (particularly when I started on my Ph.D.) that many theoretical papers quoted experimental results without understanding the experimental limitations. In addition, many experimental papers measured the properties of the chemicals they had synthesized and quoted theoretical papers without understanding the theoretical assumptions or the bounds of the measurement techniques. The result was that I became very cautious about automatically accepting papers which didn't feel right. I also set myself very high standards of proof, which meant that quite a bit of interesting research has never been published because I was not satisfied that I had proved it to my own satisfaction.

What I didn't learn: I failed to understand the motivations behind the scientific rat race for publication, promotion, prestige and funding. To become a successful "scientist" it is essential to put a strong positive spin on your research. It also helps to develop a strong social network, ideally including some of the "peers" who could be reviewing your papers or grant applications. (As someone who had been seriously bullied at school, my personal ambitions were low and my ability to develop effective social support networks was poor.)

The 6th reference turned out to be to the first page of a 200-page paper in German. The 19th century chemist had pioneered the use of a nitrating mixture of very strong acid, and typically had boiled chemicals in this mixture for an hour and then reported on the result, which (if I remember correctly) was about 20% of one product and 80% tarry mess. I found that if you took a very much weaker acid, froze it in dry ice, and added the benzimidazole, I got 100% of one product almost instantaneously. This showed the theory was wrong, and also that the Victorian chemist had been repeatedly applying a technique without understanding its limitations.

What I learnt at this stage: Much of science is basically data collection and analysis of the results, followed by publication. The German chemist had developed a technique and "turned the handle", and out had come publishable results without his having to wonder whether there were more appropriate techniques. Of course such data collection can be extremely important, and modern scientific equipment linked to computers can make it possible to collect vast quantities of data and have it analysed automatically to show the relevant patterns. The result can be very exciting, for instance in redrafting the evolutionary tree using DNA. The trouble was (and still is) that many post-graduate researchers (and some professors) are really acting as scientific technicians, with little scope to learn to use their imagination. I decided that such a narrow view of science was not for me.

What I didn't learn: The politics behind interdisciplinary research could be very difficult because of the need to deal with people whose academic credibility was based on a very deep knowledge of a very narrow topic.

Finally there was an additional unplanned spin-off from the project in that I was able to look at the underlying theory and suggest reasons why the mathematical model that was used in the paper was very susceptible to very minor variations in the original assumptions.

What I learnt: Never be afraid to question the assumptions underlying any particular area of science if you feel that they are unsound or incomplete.

What I later learnt: If you find what you believe are good reasons for questioning the assumptions, you will be told that "Exceptional claims need exceptional evidence". The problem is that exceptional evidence needs exceptional funding to gather, and to get such support you have to negotiate a way through the establishment peer review framework.

What I failed to realise was that, for the average research scientist, the safest career option was to select a narrow field where it was possible to gather large quantities of new data by turning the handle of a suitable technique, and get plenty to publish without having to use too much imagination. If you try to explore original and imaginative ideas you may become very successful, but in most cases you will fall flat on your face and be trampled by those who put the academic rat race above scientific ideals.

Some of you may think that this is a rather cynical view of scientific research - but looking back over the last 50 years I am sure I would have been far better off in financial terms if I had not decided to dedicate my life to scientific research.

Sunday, 10 April 2011

Catch 22 for the elderly with bad knees

Yesterday I strolled into town to do some shopping when I met a friend hobbling along the street. She had just come from her doctor with some bad news. From April 1st new rules had been introduced in Hertfordshire covering knee and hip replacements, and because she was overweight she would not be put on the waiting list until she had lost sufficient weight.

This is a catch 22 situation for many elderly people whose joints are beginning to wear out. Walking becomes difficult, so you walk less to ease the pain, and the resulting lack of exercise means you put on weight. The extra weight puts strain on your legs, so the joints wear out faster and you become even less mobile. If you get depressed over the situation you may take to compensatory eating, such as nibbling biscuits, and the situation deteriorates further.

Having been the official public observer on a National Health Service commissioning board, I know how such things work.

It's all sleight of hand resulting from the way Government statistics are calculated. Hospitals have to fill in a box in their returns showing how long their waiting lists are, and may be penalised if they don't meet the targets. No problem: all you do at the local level is redefine the criteria for the operation to restrict the number of patients who "need" treatment now.

The result is that the Government can claim that, despite the cuts, all the people who "need" the operation get it. The hospital saves money by doing fewer operations and meets its waiting list targets. They can all claim that the N.H.S. is not "going to pieces": just look at the excellent statistics to prove it.

What is actually "going to pieces" are the knees and hips of those who are now on the unofficial waiting list to get on the waiting list. They will be stuck in the queue until they have lost weight, become even more seriously disabled, or (a great saving never to be admitted in public) have died.

Welcome to My Blog

Our lives are all constrained, to a greater or lesser extent, by the mental and physical boxes in which we find ourselves.

Welcome to My Boxes Chris Reynolds |

|

Four years at the unconventional Dartington Hall School provided an escape from bullying. and taught me to think for myself. |

|

| I Love Science I studied Chemistry at University College London and Exeter University but almost anything covered by the New Scientist magazine interests me.. Expect me to ask some interesting questions on my blog |

In 1965 I got sucked into the computer industry. I worked on one of the biggest Leo Computer systems in the UK. Haven't things changed! This computer was less powerful than my mobile phone. |

| In 1967 I discovered CODIL, which was a way to build "computers" which understood people. The "high priests" of the Computer Industry didn't want to know!!! |

I spent 17 years as Reader in Computer Science at Brunel University researching and teaching about human computer interaction. The University was the location for the film "A Clockwork Orange" |

| My daughter Lucy became trapped in a "box" nick-named the Muppet House and killed herself a year later. |

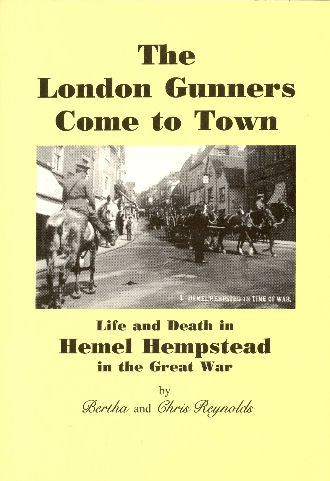

I could not stop doing research, so in retirement I switched to local and family history and wrote a book about Hemel Hempstead.  I now provide help and advice on the Genealogy in Hertfordshire web site |

| |

And to relax (when I feel trapped by the mental and physical boxes that surround me) I enjoy walking the countryside taking photographs. You will find a number of mine on Geograph, such as this one at the College Lake Nature Reserve, near Tring, Hertfordshire. |

How the blog develops will depend on what catches my attention, and what reactions I get from people posting comments. Many of the posts will place an emphasis on the boxes that trap us, and a significant number will relate to the ways computers and technology affect our lives.

Enjoy!
